|

|---|

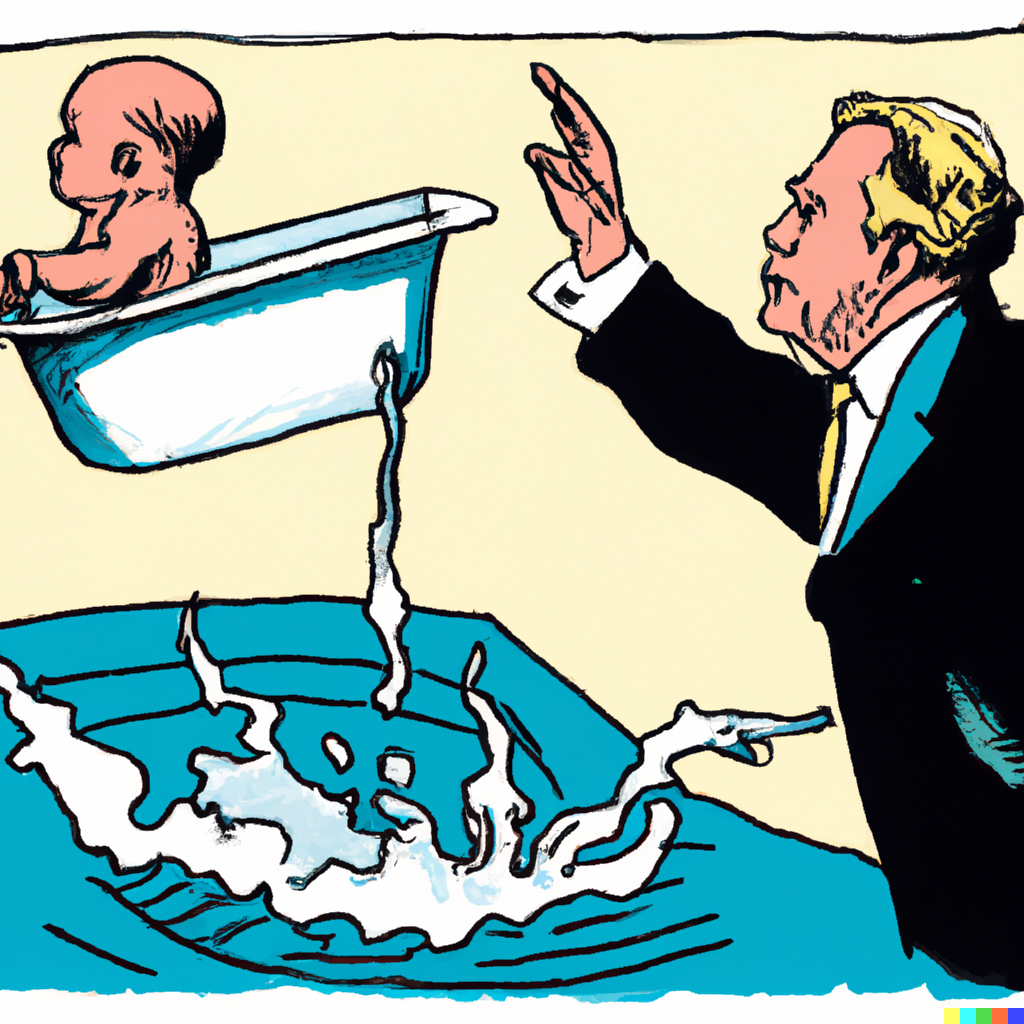

| Image generated with DALLE-2 |

Making sure the children in our society do not regularly stumble upon damaging material while browsing the internet is something we can all get behind. Our UK government agrees. Back in 2016, a study commissioned by the National Society for the Prevention of Cruelty to Children (NSPCC) and the Office of the Children’s Commissioner (OCC) surveyed 1001 children and young teens about their experience of internet pornography. The survey suggested that about half had seen pornography online, with the first exposure most likely being accidental.

In response, the government passed into law an age-verification provision as part of the Digital Economy Act 2017. This provision will require, among other things, that commercial publishers of online pornography verify that the consumers of their products are 18 or older. To enforce the requirement, a UK age-verification regulator will be created, with the power to impose fines of £250K or 5% of annual turnover (whichever is larger) on any publisher that it deems to be contravening the law. Measured as a share of turnover, this is a bigger stick than that wielded by GDPR. Further, the letter of the law is clear that a self-declaration of age is insufficient as age-verification. On the face of it, this is not that bad, but how do they plan to check our age?
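To put rough numbers on that fine, here is a minimal sketch of the "whichever is larger" rule as I have described it above. The function name and the example turnover figures are my own illustrations, not anything taken from the Act.

```python
# Sketch of the maximum-fine rule as described in this post:
# the larger of a flat £250,000 or 5% of annual turnover.
# The names and example turnovers below are illustrative assumptions.
FLAT_AMOUNT_GBP = 250_000
TURNOVER_PERCENT = 5


def max_fine_gbp(annual_turnover_gbp: float) -> float:
    """Return the larger of the flat amount and 5% of turnover."""
    turnover_based = annual_turnover_gbp * TURNOVER_PERCENT / 100
    return max(FLAT_AMOUNT_GBP, turnover_based)


for turnover in (1_000_000, 5_000_000, 100_000_000):
    fine = max_fine_gbp(turnover)
    print(f"turnover £{turnover:,} -> maximum fine £{fine:,.0f}")
```

Under these assumptions the two amounts cross at a turnover of £5 million: any publisher smaller than that faces the flat £250K, which is a far larger share of its revenue than 5%.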

In practice, age-verification will require a technological solution deployed at scale, and the government is leaving it up to each publisher to implement it. This is the problem. Any form of age verification will ultimately require consumers to divulge private information about themselves, information that ties their identity to their viewing habits, potentially over time. This could result in the creation of a large, potentially national, database linking real, legally verified identities to porn viewing habits. This is a possibility, not a certainty. So what’s the problem then?

Well, let’s replace “online age-verification” with something a bit more relatable: getting your ID checked when buying alcohol. But instead of the shopkeeper just checking your ID, she hires a third party, a professional ID checker, to do it for her. Every time you want to buy booze, the shopkeeper takes your ID, sends it to this professional via the mail, gets a response back, and then sells you the product (or probably tells you about the £10 card limit). What if there were another professional who charged half as much as the first? Clearly this one would be the preferred choice of our economically aware alcohol guardian. But how could it be cheaper? Well, here is where it starts to get interesting. The second professional could, for example, employ a cheaper way to get your ID sent to them; maybe it gets sent via a third party. Another way to cut costs is advertising: they could get your consent to use your drinking habits for marketing purposes. Now we can just replace “shopkeeper” with “online porn publisher”.

The bigger publishers will all be able to afford the more privacy-friendly age verification technologies, those where a database is not part of the equation. However, because the letter of the law does not explicitly require this, we end up with the very real possibility that the smaller publishers will shop around for the cheapest way to comply with the regulation. This is what opens the door for the hybrid advertiser / age verifier company.

To make matters even more concerning, the internet is the type of place where a handful of very big age verifiers is far more likely to emerge than a large number of small ones (you won’t find many local mom and pop stores offering age verification). To see why, consider that the average user would not want to verify their age multiple times in one... umm... session. Instead, they would rather sign in to an age verifier only once, and then continue browsing as many sites as they wish (invariably with the eyes of the age verifier over their shoulder). Therefore, the more sites use a particular age verifier, the more attractive that age verifier becomes to other sites, and so on. Markets exposed to this kind of network effect are a welcome environment for winner-take-all dynamics and tend to foster a few entities gaining an enormous advantage over smaller players (for more, see this analysis by the venerable David Evans).
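For the curious, the rich-get-richer dynamic described above can be sketched as a toy simulation. Everything in it is an assumption of mine for illustration: five hypothetical verifiers, a thousand sites joining one at a time, and each site choosing a verifier with a probability that grows with the square of that verifier's existing site count (the `strength` parameter). It is not a model of the real market.

```python
import random


def simulate_verifier_adoption(num_verifiers=5, num_sites=1000,
                               strength=2.0, seed=0):
    """Toy model: each new site picks a verifier with probability
    proportional to (sites already using it + 1) ** strength.
    All parameters are illustrative assumptions."""
    rng = random.Random(seed)
    adopters = [0] * num_verifiers
    for _ in range(num_sites):
        # The +1 gives every verifier a non-zero chance at the start.
        weights = [(count + 1) ** strength for count in adopters]
        chosen = rng.choices(range(num_verifiers), weights=weights, k=1)[0]
        adopters[chosen] += 1
    return adopters


counts = simulate_verifier_adoption()
total = sum(counts)
for rank, count in enumerate(sorted(counts, reverse=True), start=1):
    print(f"verifier #{rank}: {count / total:.1%} of sites")
```

Setting `strength` to 0 makes every site choose uniformly at random, which gives a baseline to compare against; raising it makes an early lead harder and harder for the other verifiers to overcome.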

All of this creates the conditions for a firm to emerge that builds the databases we’ve been talking about, and for those to become very big databases indeed. But advertisers already have these databases in one form or another, so what’s the big deal? The key difference between normal online tracking and this version is that these age verification services will have direct access to our real identities as opposed to a random-looking advertising ID. But wait, even if they have this database online, we are protected by privacy regulation, right? Yes, in a perfect world.

There would be two major issues if such a database existed at a national scale: the risk of leaks and the slippery slope into mass government surveillance. Over the last few decades, data leaks have been a repeating nightmare. From 2005 to 2018 alone there were 37 major incidents, many involving the personal data of millions of individuals. These involved tech-savvy companies such as Facebook, Google, Equifax, and even Reddit, which all famously recruit some of the most talented and competent staff the industry has to offer.

The more systemic risk posed by a national online database of people’s porn habits is that the state could, in one way or another, use these habits against the public on ideological grounds. The Digital Economy Act itself already includes language that suggests the policing of porn habits (e.g. multiple references to “extreme pornographic material” in paragraph 21.1.b). The creation of a regulator to police such things is uncomfortably close to the moral or cultural police that exist in many countries that the UK routinely chastises over human rights abuses.

Children and young teens require, deserve, and are entitled to protections against damaging content online and offline. However, stumbling upon porn online is not a risk that requires a response on the scale of enforced national age-verification. One could argue that better sex education, home Wi-Fi filters controlled by parents, or even more elaborate versions of self-declaration of age are all much more appropriate responses to the risk under discussion. As of now, the law is not going to come into effect until at least the winter of 2020, but that date will arrive. Before then, the debate on the Digital Economy Act 2017 needs to be re-opened, otherwise we risk throwing our privacy out with the bathwater.

(Disclaimer: The views expressed here are my own and don’t reflect those of anyone or any institution that I may be associated with in the real world.)